December brought a flurry of search industry developments from the Google camp. Below, we break down the key updates and their implications for publishers and digital marketers.

Google Search

December spam update

Google also released a significant spam update in December, its third of 2024.

Released on December 19th and completed by the 26th, this update targeted spam content on a global scale, with widespread reports of ranking and deindexing issues. Merry Christmas from Google.

Google provided little insight into which spam policies or algorithms were updated, simply encouraging those affected to review its spam policies and ensure compliance. It also noted that recovery could take months.

Read Google’s full statement below:

‘While Google's automated systems to detect search spam are constantly operating, we occasionally make notable improvements to how they work. When we do, we refer to this as a spam update and share when they happen on our list of Google Search ranking updates.

For example, SpamBrain is our AI-based spam-prevention system. From time-to-time, we improve that system to make it better at spotting spam and to help ensure it catches new types of spam.

Sites that see a change after a spam update should review our spam policies to ensure they are complying with those. Sites that violate our policies may rank lower in results or not appear in results at all. Making changes may help a site improve if our automated systems learn over a period of months that the site complies with our spam policies.’

For publishers…

Strict adherence to Google’s spam policies is essential. Audit your site for spam indicators (low-quality content, unnatural link practices, misleading metadata, etc.) to avoid potential penalties and speed up recovery if you are affected.

November & December core updates

Not only did the November core update finish rolling out in December (see our full analysis), but another core update followed almost immediately.

The December core update, launched on December 12th and completed in just six days, was the fastest core update to date. Released globally, it targeted all content types and, judging by the ensuing rank volatility, appears to have been more severe than the November update.

Average rank fluctuation was 2.8 SERP positions, compared with a fluctuation score of 2.4 for the November core update.

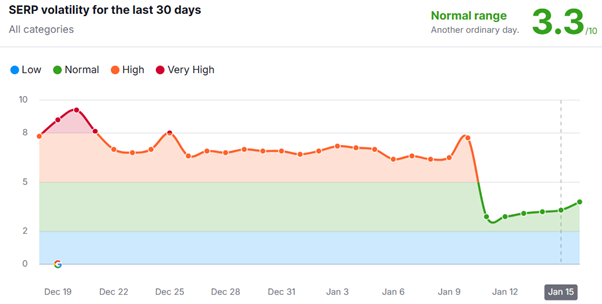

As you can see from the Semrush volatility sensor chart above, the SERPs have started to cool off in the UK, down from a significant peak the day after the update wrapped up.

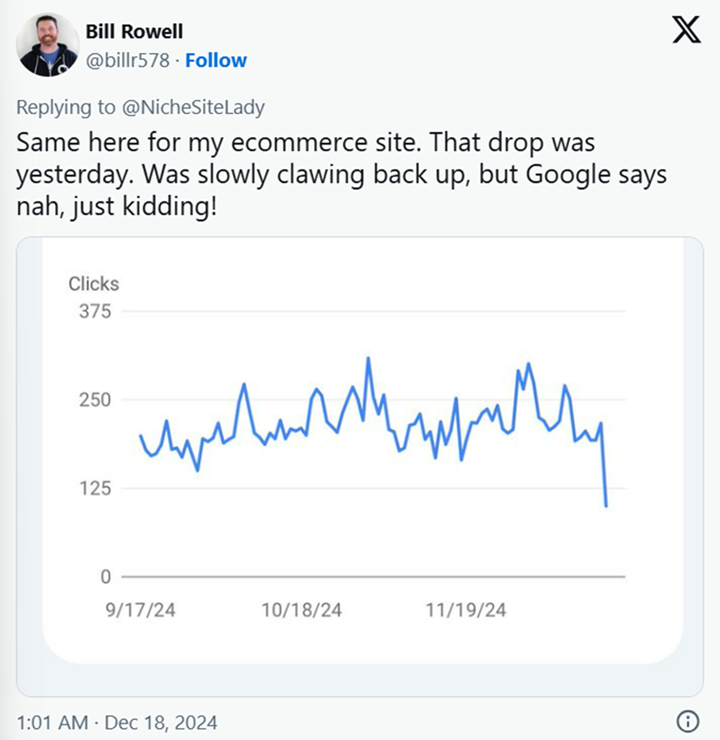

Many sites hit by the Helpful Content Update (HCU) that had seen some recovery following the March 2024 core update reported a return to their post-HCU lows.

Source: SERoundtable


For others, it was a welcome refresh, with some publishers and SEOs reporting a return to form after being hit by the November 2024 update.

Google hasn’t released any specifics, but did offer this:

‘If you’re wondering why there’s a core update this month after one last month, we have different core systems we’re always improving.’

This implies the updates were technically unrelated. But as both had a broad reach and affected all content types, it’s reasonable to assume that these “different” core systems are symbiotic to some degree, or at least overlap in their influence.

For publishers…

As we discuss in more detail below, the pace of algorithm updates is quickening, so regular technical audits and high-quality content production are critical. Prepare for continued turbulence by monitoring traffic patterns and diversifying your traffic sources.

Google core updates to be released more frequently

At Google Search Central Live in Zurich, Google announced plans to increase the frequency of core updates. It’s unclear how frequent they will become, or whether they will roll out continuously, with releases occurring back-to-back.

The updates may be smaller in scope, collectively covering the same ground as the four larger updates per year we’re accustomed to.

With some big changes for Search on the horizon, it stands to reason that Google would want tighter control as those changes go live. Smaller, more frequent updates may make troubleshooting and problem-solving more manageable for Google.

Many publishers are concerned that they will be unable to weather more frequent updates. Others welcome the move, anticipating more opportunities to recover lost visibility quickly, and for Google to improve its Search ecosystem.

For publishers…

In theory, more frequent updates will make rank volatility near constant, especially in the lower SERP positions. More dynamic SERPs will likely require publishers to focus on long-term strategies rather than chasing short-term ranking gains.

Maintaining high-quality content that meets user intent, along with meeting Google's Core Web Vitals thresholds and following its E-E-A-T principles (Experience, Expertise, Authoritativeness, and Trustworthiness), will remain critical for success.

Additionally, publishers may need to invest in tools and talent that can help them analyse trends and make data-driven decisions quickly. Collaboration between content creators, SEO teams, and technical experts will become increasingly vital to remain competitive.

Gemini expansion plans and AI Search Mode

Sundar Pichai confirmed that scaling Gemini on the consumer side will be Google’s top priority for 2025. Gemini will soon support an optional “AI Mode” within Search, allowing conversational interactions and file uploads for a more personalised search experience.

We suspect Google intends to make Gemini’s AI-driven interface the default search mode after a period of testing, and that current Gemini integrations with SERP features, such as “Things to Know” and “What People Are Saying,” will expand rapidly.

Pichai said he expected some back-and-forth between Google and OpenAI in the AI arena, but that an expedited workflow would see Google produce the best-in-class product.

For publishers…

This means publishers must prepare for a shift in user behaviour and new venues of engagement. Optimising for visibility in answer engine outputs will be crucial, although it’s worth noting that there is a growing overlap between AI Overviews and traditional SERP results.

This suggests a strong SEO strategy will be necessary to boost your organic rank and increase the chances of being mentioned by Google’s AI.

Google SEO

Google says JavaScript could limit visibility in AI-driven search

During a recent episode of the Search Off the Record podcast, Google’s Search Relations team discussed issues surrounding JavaScript on websites and AI crawl bots.

For the uninitiated, JavaScript is a programming language that enables dynamic features on webpages — think push notifications, live countdown clocks, 3D digital property tours, etc.

Google Search Developer Advocate, Martin Splitt, led the conversation, explaining that JavaScript is frequently applied to more tasks than necessary.

Search advocate John Mueller agreed, stating,

“There are lots of people that like these JavaScript frameworks, and they use them for things where JavaScript really makes sense, and they’re like, ‘Why don’t I just use it for everything?’”

The point the Search Relations team was making is that AI crawlers tend to rely on a page’s HTML, so overusing JavaScript could seriously limit your visibility in AI-generated search results.

Collectively, AI crawl bots (e.g., the crawlers behind ChatGPT, Perplexity, and Claude) already make well over a billion fetches a month, gaining quickly on Googlebot’s 4.5 billion monthly fetches.

This suggests that more and more people are using non-Google AI tools to complete tasks traditionally reserved for standard search engines, so making sure your site is AI crawl bot friendly is a must.
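A practical first step is confirming that your robots.txt isn't blocking these crawlers outright. The sketch below uses Python's standard-library `urllib.robotparser`; `GPTBot`, `ClaudeBot`, and `PerplexityBot` are the user-agent tokens OpenAI, Anthropic, and Perplexity publish for their crawlers, while the robots.txt rules, the URLs, and the `crawler_access` helper are illustrative assumptions. `robotparser` applies simpler matching than Google's own parser (no path wildcards, first matching rule wins), so treat the result as a quick check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens published by OpenAI, Anthropic, and Perplexity, plus
# Googlebot for comparison. Illustrative, not an exhaustive list.
CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot", "Googlebot")


def crawler_access(robots_txt: str, url: str, agents=CRAWLERS) -> dict:
    """Map each user agent to True/False: may it fetch `url` under these rules?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Hypothetical robots.txt that blocks GPTBot from the whole site.
EXAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    access = crawler_access(EXAMPLE_ROBOTS, "https://example.com/news/story")
    for agent, allowed in access.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this example GPTBot is blocked from the whole site, while the other crawlers fall through to the `*` group and can reach everything outside `/admin/`.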

For publishers…

Ultimately, you need to strike a balance between slick, dynamic features and crawl bot accessibility on your webpages. Before you can, it’s crucial to identify any clearly unnecessary use of JavaScript.

By unnecessary, we mean any function that HTML or CSS alone can handle, or that needs far less JavaScript than it is given. Some examples include:

- Simple navigation and accordion menus

- Animations that CSS keyframes or transition properties can achieve without JavaScript overhead

- Loading jQuery just to add a class or handle a click event, which a few lines of native JavaScript can do

Any bloated or inefficient JavaScript code has to go. This is especially true for the key SEO elements of your webpages. For instance, if your content headers or body text rely on JavaScript, AI crawlers are going to struggle to process them, and if they don’t know what your content is about, they won’t use it in their outputs.

Instead, consider:

- Using HTML to deliver all primary content, so AI crawl bots can understand the core concept of your content.

- Using server-side rendering for all primary content, as many AI crawl bots don’t execute client-side JavaScript.

- Using static generation (or incremental static regeneration, where your framework supports it) instead of client-side rendering where achievable.
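To check whether your primary content survives without JavaScript, look at the raw HTML your server returns before any scripts run, which is roughly what a non-rendering crawler receives. The sketch below uses Python's standard-library `html.parser`; the `RawContentExtractor` class, the `missing_from_raw_html` helper, and treating `h1`–`h3` and `p` text as "primary content" are our own simplifying assumptions. It doesn't fetch pages itself, so pass it the HTML from View Source or a plain HTTP request.

```python
from html.parser import HTMLParser
from typing import List, Optional


class RawContentExtractor(HTMLParser):
    """Collect heading and paragraph text from raw HTML without executing JavaScript."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        self.current: Optional[str] = None  # content tag we are currently inside
        self.headings: List[str] = []
        self.paragraphs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS or tag == "p":
            self.current = tag

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        text = data.strip()
        if not text or self.current is None:
            return  # ignore whitespace and text outside headings/paragraphs
        if self.current in self.HEADINGS:
            self.headings.append(text)
        else:
            self.paragraphs.append(text)


def missing_from_raw_html(raw_html: str, key_phrases: List[str]) -> List[str]:
    """Return the key phrases that do not appear in the raw HTML's headings or paragraphs."""
    extractor = RawContentExtractor()
    extractor.feed(raw_html)
    extractor.close()
    readable = " ".join(extractor.headings + extractor.paragraphs)
    return [phrase for phrase in key_phrases if phrase not in readable]


# Hypothetical pages: one builds its content in the browser, one ships it in the HTML.
CLIENT_RENDERED = """
<html><body>
  <div id="app"></div>
  <script src="/static/bundle.js"></script>
</body></html>
"""

SERVER_RENDERED = """
<html><body>
  <h1>Spring fixtures announced</h1>
  <p>The full list of dates is below.</p>
</body></html>
"""

if __name__ == "__main__":
    for name, page in [("client-rendered", CLIENT_RENDERED), ("server-rendered", SERVER_RENDERED)]:
        print(name, missing_from_raw_html(page, ["Spring fixtures announced"]))
```

The client-rendered page reports the phrase as missing because its heading only exists after `bundle.js` runs; a crawler that skips JavaScript would see an empty `div`.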

If you work with a developer, communication is key. Make sure they’re aware of the SEO implications of JavaScript-first development strategies, and work together to strike a healthy balance between JavaScript and plain HTML and CSS.

Insights about Google's ranking signals revealed

Mark Williams-Cook’s presentation at SearchNorwich offered valuable insights into Google’s ranking signals, based on his access to a Google endpoint.

Key discoveries included:

- Consensus scores:

Google counts the passages in a piece of content that agree with, contradict, or are neutral towards the general consensus. It then calculates a consensus score that can affect how you rank for specific queries.

For high-consensus queries, such as ‘is the earth round or flat’, Google will place lots of content with high consensus scores in the top ranks. For more subjective queries, Google intentionally mixes pages with different consensus scores to deliver more impartial results.

This means that, for more subjective queries, you may not rank in 1st place, even with a perfect piece of content, if a good website already covers your angle. Nor are you likely to rank directly below it, as Google wants to place something with a different opinion in a highly visible position.
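To make the idea concrete, here is a toy version of the scoring step. The presentation didn't disclose Google's formula, so `consensus_score` and its (agree minus contradict) divided by total calculation are purely our illustrative assumption, as is the premise that each passage has already been labelled; in practice that labelling would need a language model, which isn't shown.

```python
from collections import Counter
from typing import Iterable

LABELS = {"agree", "contradict", "neutral"}


def consensus_score(passage_labels: Iterable[str]) -> float:
    """Hypothetical score in [-1, 1]: +1 if every passage agrees with the
    consensus, -1 if every passage contradicts it, 0 if balanced or neutral."""
    counts = Counter(passage_labels)
    unknown = set(counts) - LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return 0.0  # no passages to judge
    return (counts["agree"] - counts["contradict"]) / total


if __name__ == "__main__":
    # Hypothetical labels for a four-passage article.
    article = ["agree", "agree", "neutral", "contradict"]
    print(consensus_score(article))  # 0.25
```

Under this toy scheme, a high-consensus query would favour pages scoring near 1, while a subjective query would deliberately surface pages from across the range.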

- Site quality scores:

Google also assigns every website a site quality score between 0 and 1 at subdomain level, and applies a thresholding system to it to determine what your content is eligible for in terms of visibility on its SERPs.

For instance, content within any subdomain that scores below 0.4 on the quality scale is not considered for rich feature inclusion.

Site quality score is determined by the following:

- How many times users specifically search for your website either in isolation or alongside other search terms.

- How many people select your website on the SERPs, regardless of position.

- How often your website name/brand appears in anchor text around the web.

If there is no user data, or too little to calculate a quality score for a site, Google uses a predictive model that compares the site with others it does have user data for.
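The thresholding described above can be sketched as a simple gate. Only the 0 to 1 scale and the 0.4 cut-off for rich results come from the presentation; the `site_quality` and `eligible_for_rich_results` helpers are hypothetical, and averaging comparable sites' scores is a crude stand-in for Google's predictive model, whose workings weren't disclosed.

```python
from statistics import mean
from typing import List, Optional

# Cut-off for rich result eligibility, as cited in the presentation.
RICH_RESULT_THRESHOLD = 0.4


def site_quality(observed: Optional[float], comparable_scores: List[float]) -> float:
    """Return the observed score when there is enough user data; otherwise fall
    back to a stand-in prediction (the mean score of comparable sites)."""
    if observed is not None:
        return observed
    if not comparable_scores:
        raise ValueError("no observed score and no comparable sites to predict from")
    return mean(comparable_scores)


def eligible_for_rich_results(score: float) -> bool:
    """Subdomains scoring below the threshold are excluded from rich results."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("site quality score must be between 0 and 1")
    return score >= RICH_RESULT_THRESHOLD


if __name__ == "__main__":
    print(eligible_for_rich_results(site_quality(0.35, [])))            # False
    print(eligible_for_rich_results(site_quality(None, [0.25, 0.75])))  # True
```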

For publishers…

Confirmation that Google essentially runs websites through a series of heats to grant access to different levels of exposure highlights the importance of a comprehensive approach to SEO and UX.

For example, you might produce a perfect piece of content, but unless your site’s quality score is at least 0.4, that content will be out of the running for rich results, severely limiting your visibility compared to competitors who do meet this threshold.

Focus on building long-term user trust and authority. Positive brand searches, strong CTR, and widespread mentions of your site across the web all feed into your quality score.

Updated policies and FAQs on site reputation abuse

Google added nine FAQs to its site reputation abuse policy, clarifying how flagged content should be handled. Here are the key points:

- Not all third-party content is in violation of Google’s site reputation policy.

- Freelance content is considered third-party — but only violates Google’s spam policy if it attempts to ‘abuse search rankings.’

- Noindexing content in violation of the site reputation abuse policy does not automatically remove a manual action. If you noindex content, it’s best to inform Google by replying to the manual action in Search Console.

- Moving flagged content to another area of the same site, or to another well-established site, is not acceptable. Moving it to a new domain with no established reputation to take advantage of is generally okay.

- When you move flagged content, you still need to request reconsideration before the manual action can be removed.

- You should not redirect from the old site to the new domain when moving your flagged content.

- You can link from the old site to the new site, but be sure to use the nofollow attribute for all links on the abused site.
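If you do link from the abused site to the new domain, it's worth checking that none of those links were left followed. A minimal sketch using Python's standard-library `html.parser` and `urllib.parse`; the `links_missing_nofollow` helper and the example domains are hypothetical, and it only inspects the static HTML you pass in, not links injected by scripts.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urlparse


class LinkAuditor(HTMLParser):
    """Record <a href> links to a target domain that lack rel="nofollow"."""

    def __init__(self, target_domain: str) -> None:
        super().__init__()
        self.target_domain = target_domain.lower()
        self.followed_links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        host = (urlparse(href).hostname or "").lower()
        if host != self.target_domain and not host.endswith("." + self.target_domain):
            return  # not a link to the new domain
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" not in rel_values:
            self.followed_links.append(href)


def links_missing_nofollow(page_html: str, new_domain: str) -> List[str]:
    """Return hrefs pointing at `new_domain` that are not marked nofollow."""
    auditor = LinkAuditor(new_domain)
    auditor.feed(page_html)
    auditor.close()
    return auditor.followed_links


# Hypothetical page on the abused site.
PAGE = """
<p>This guide has moved to <a href="https://new-coupons.example/guide">our new site</a>.</p>
<p>See also <a rel="nofollow" href="https://new-coupons.example/deals">current deals</a>.</p>
<p>Thanks to <a href="https://partner.example/">our partner</a>.</p>
"""

if __name__ == "__main__":
    print(links_missing_nofollow(PAGE, "new-coupons.example"))
```

Only the first link is flagged: the second already carries `rel="nofollow"`, and the third points at a different domain.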

For publishers…

This means managing flagged content with care is critical. In a nutshell, any content given a manual action should either be removed from the web or moved to a domain with no established reputation on which to piggyback.

And, whichever you go with, remember to keep Google informed so it can remove the manual action against your site.

Ranking for branded keywords doesn't happen automatically

For those outside the SEO bubble, it might seem logical that when a user searches for your business name in Google, your business should appear near the top of the first page.

However, as stated by Google’s John Mueller in response to a recent question on this topic,

‘This is a competitive space… just because you call your company something doesn’t mean you’ll show up on top of search like that.’

For publishers…

As with any non-branded search term, gaining visibility at the top of the SERPs for branded keywords involves carefully planned SEO.

Granted, unique business names face less competition for the top spots, but even then, a lack of targeted SEO can slow your ascent.

Struggling to rank for branded keywords? Let’s talk.

Dip in AI Overviews for finance and health queries

December saw a significant decline in AI Overviews appearing for search terms in two verticals, finance and health, with their share falling from 25% to 19% and from 60% to 50% respectively.

This may be a sign that Google is concerned AI Overviews is getting too involved with YMYL (your money or your life) queries, and that it wants to limit the potential for controversy in the lead-up to the release of AI Mode.
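Because the two verticals start from very different baselines, it helps to separate percentage-point drops from relative drops. A quick calculation on the finance and health figures above (the `share_change` helper is just for illustration):

```python
def share_change(before_pct: float, after_pct: float) -> tuple:
    """Return (percentage-point change, relative change in %) between two shares."""
    points = after_pct - before_pct
    relative = points * 100 / before_pct
    return points, relative


if __name__ == "__main__":
    # AI Overview shares before and after, per vertical (figures from the article).
    for vertical, before, after in [("finance", 25, 19), ("health", 60, 50)]:
        points, relative = share_change(before, after)
        print(f"{vertical}: {points:+} points, {relative:+.1f}% relative")
```

Health lost more ground in absolute terms (10 points versus 6), but finance's drop is steeper relative to where it started (24% versus roughly 16.7%).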

For publishers in these verticals…

If limited exposure in Google’s AI-generated results continues for these verticals, it is likely to increase competition significantly as brands vie for a finite number of mentions in AI Mode.

To prepare, publishers in finance and health should focus on strengthening their website authority and user trust. Create high-quality, accurate content that aligns with Google's E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines. As a high organic rank on Google's SERPs can improve your odds of inclusion in Gemini's outputs, SEO should remain a top priority.

New Business Hours options in Google Business Profiles

Google has added several more business hours options to Business Profiles, helping you to give users more accurate and valuable information.

Specifically, the new hours categories relate to religion and restaurants:

Restaurants:

- Dinner

- Lunch

- Breakfast

- Happy hours

- Kitchen

- Online service hours

Religion:

- Prayer

- Adoration

- Confession

- Jummah

- Mass

- Sabbath

- Worship service

For business owners…

We recommend using any of the new options that apply to your business. The more helpful information you can include on your Business Profile, the more visibility Google is likely to grant it.

That said, with AI Overviews cannibalising information from Business Profiles, this visibility may not always involve your profile itself. Find out more about this in our recent update on AI Overviews and GBP reporting.

If you’ve been hit with a GBP video verification request by Google, follow our step-by-step video verification guide to get your account back in action.

Stay current with TDMP

With Google gearing up for the release of its first full AI Search Mode, the search industry is on the cusp of seismic changes. At TDMP, we’re here to help publishers stay ahead of the curve. Contact us today for comprehensive digital marketing support.